Welcome to the Conversation Lab page!

In our lab, we are currently working on various projects on the cognitive processes underlying language and conversation. To read more about our published studies, click here.

If you are interested in becoming a graduate student in the lab, please click here.

If you are interested in undergraduate research positions in the lab, click here and fill out the application form. Independent research projects are also available to students who have worked in my lab for at least two consecutive semesters and who approach me well in advance.

Recent Events:

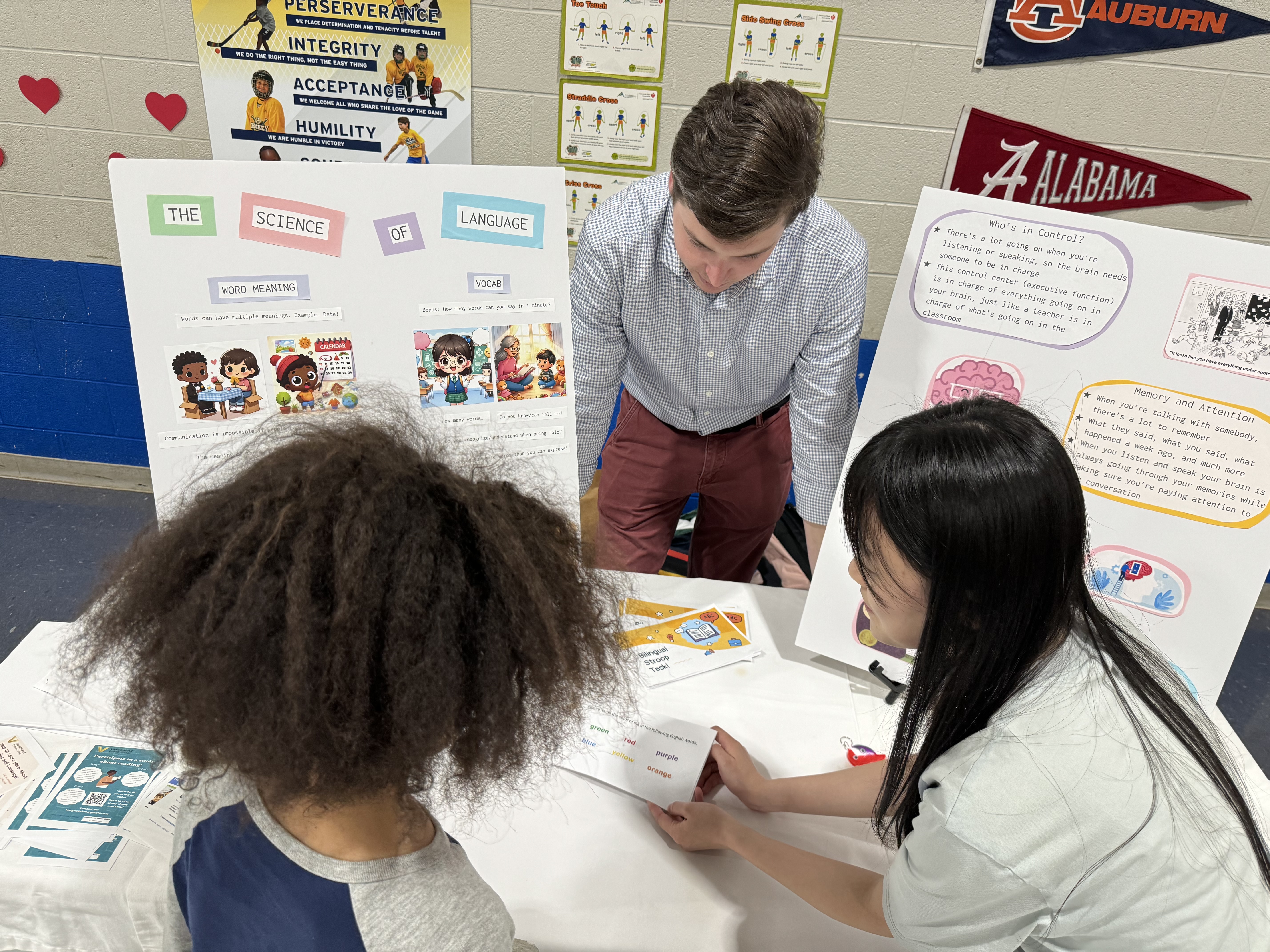

Patrick Sherlock and Zoey Zhou talking to students at Glendale Elementary School.

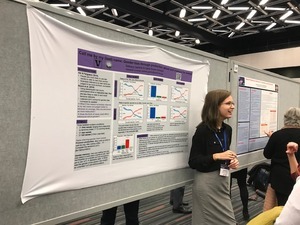

Bethany Gardner at Psychonomics 2019 in Montreal.

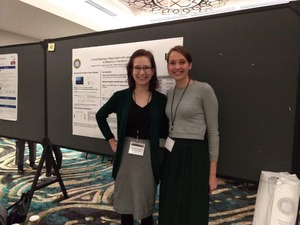

Bethany Gardner (left) and Evgeniia Diachek (right) at CUNY 2019 in Boulder, CO.

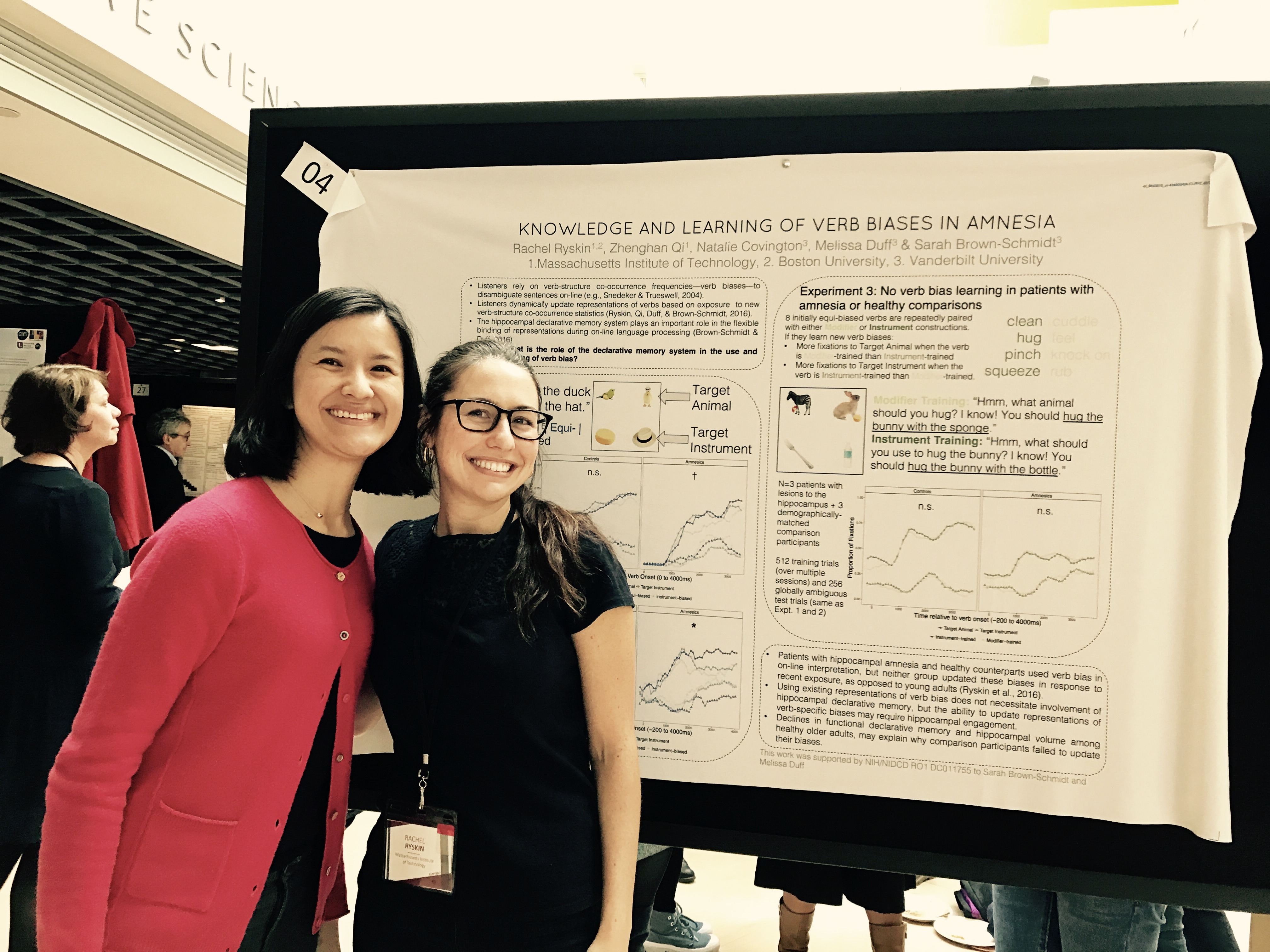

Rachel Ryskin and Zhenghan Qi at CUNY 2017.

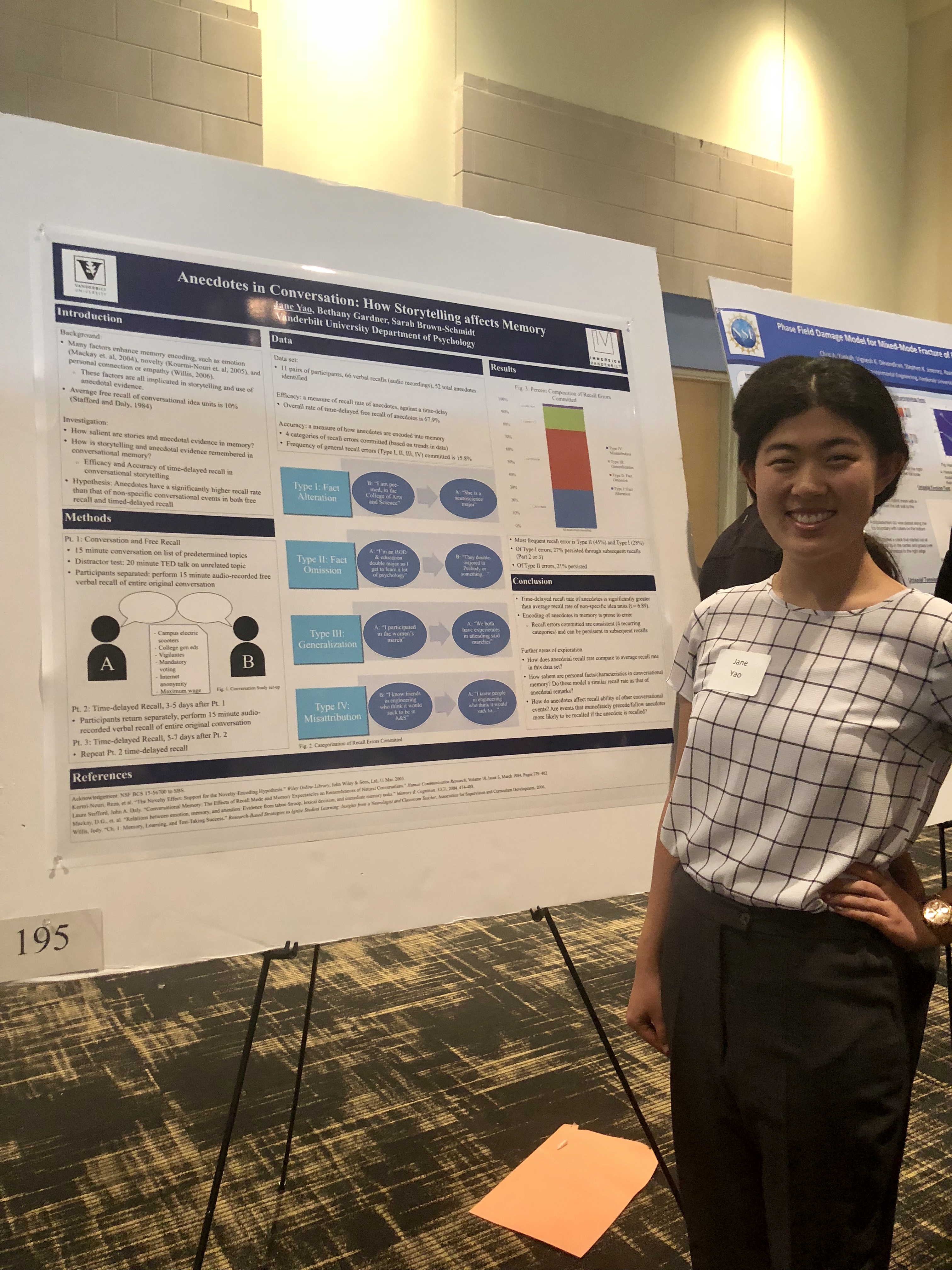

Jane Yao at the 2019 Vanderbilt Undergraduate Research Fair.

Meet Our Lab Members:

Dr. Sarah Brown-Schmidt (director)

In my professional life, I am a Professor in the Department of Psychology & Human Development at Vanderbilt University. My research focuses on the mechanisms by which people produce and understand utterances during the most basic form of language use: interactive conversation. I am currently pursuing three related lines of research: common ground and perspective taking, memory and language, and message formulation. For more information about my research, visit my Professional Page.

Patrick Sherlock (Lab Manager)

I graduated from the University of Southern California in 2023 with a BS in neuroscience and a minor in linguistics. My undergraduate research was in both neuroanatomy and psycholinguistics.

I am interested in understanding how language is represented in the brain, including in cases of multilingualism, brain injury, and language disorders.

Melissa Evans (PhD Student)

I'm a second-year PhD student in Psychological Sciences at Vanderbilt University, working with Sarah Brown-Schmidt. I graduated from Butler University in 2020 with a B.A. in Psychology and Critical Communications. There, I did research in behavioral neuroscience with Dr. Jennifer Berry.

I'm interested in a wide range of topics involving language comprehension and conversation.

Caitlin Volante (PhD Student)

I am a first-year PhD student in Psychological Sciences working with Dr. Sarah Brown-Schmidt. I received my B.S. in Neuroscience from Florida State University. While there, I worked with Dr. Michael Kaschak, focusing my honors thesis on how gender, race, and social status affect the perception of high rising terminals.

I am interested in how different aspects of language and conversation differ between typical and atypical populations.

Alumni:

Bethany Gardner (Ph.D. 2023)

Evgeniia Diachek (Ph.D. 2023)

Anna McKay Wright (Ph.D. 2023)

Katie Lord (Clinical Research Coordinator, Massachusetts General Hospital Mood and Behavior Lab)

Jordan Zimmerman (Clinical Research Coordinator, Massachusetts General Hospital)

Craycraft (MA, 2018)

Rachel Ryskin (Ph.D. 2016, Assistant Professor, UC Merced)

Si On Yoon (Ph.D. 2016, Assistant Professor, University of Iowa)

Kate Sanders (MA, 2014)

Alison Trude (Ph.D. 2013, now at Amazon)

MATLAB code for running and analyzing experiments:

Most of our experiments are run using MATLAB along with the Psychophysics Toolbox (PTB-3; Brainard, 1997; Pelli, 1997). PTB-3 has what used to be known as the Eyelink Toolbox built in, which allows us to send commands to our eye-tracker from within MATLAB. I have written some demo code that (1) runs a visual-world eye-tracking experiment and (2) analyzes the data in MATLAB. To get started, download the zip file here and follow the instructions in the README file. This demo is meant to provide some basic ideas about how to start programming experiments in MATLAB, and it will almost certainly need to be edited to meet your needs (to fix bugs, improve efficiency, etc.). I welcome any comments or feedback you have. I make no guarantees about the accuracy of the code, and there is probably a better way to program these experiments than the way we have done it here.

We use EyeLink 1000 eye-trackers to monitor the eye movements of participants as they engage in dialog tasks. Our research focuses on the mechanisms that support language processing in everyday conversation. For a description of my research interests, please click on the research link.